Rachel Greenstadt holds a bachelor’s degree in Computer Science (2001) and a master’s degree in Electrical Engineering and Computer Science (2002) from MIT, as well as a Ph.D. in Computer Science (2007) from Harvard. Her honors include membership in the DARPA Computer Science Study Group, a U.S. Department of Homeland Security Fellowship, a PET Award for Outstanding Research in Privacy Enhancing Technologies, and a National Science Foundation CAREER Award.

Greenstadt's research focuses on designing more trustworthy intelligent systems: systems that act not only autonomously but also with integrity, so that they can be trusted with important data and decisions. She takes a highly interdisciplinary approach to this work, drawing on ideas from artificial intelligence, psychology, economics, data privacy, and system security.

Before joining NYU, Greenstadt was an Associate Professor of Computer Science at Drexel University, where she ran the highly regarded Privacy, Security, and Automation Laboratory (PSAL) and advised the Drexel Women in Computing Society. Earlier, she was a Postdoctoral Fellow at Harvard’s School of Engineering and Applied Sciences, a Visiting Scholar with the University of Southern California TEAMCORE group, and a Research Intern at Lawrence Livermore National Laboratory. Throughout her career, she has edited multiple volumes of the journal Proceedings on Privacy Enhancing Technologies (PoPETs) and has been in demand as a peer reviewer. Greenstadt has chaired the ACM Workshop on Artificial Intelligence and Security multiple times and has regularly participated in, spoken at, and served on program committees for several other workshops that build ties between the security, AI, and usability communities. She has long been an active speaker and participant in the international hacking community, and her work has been presented at Hacking at Random (Vierhouten, NL), ShmooCon, DefCon, and the Chaos Communication Congress.

Research News

Studying the online deepfake community

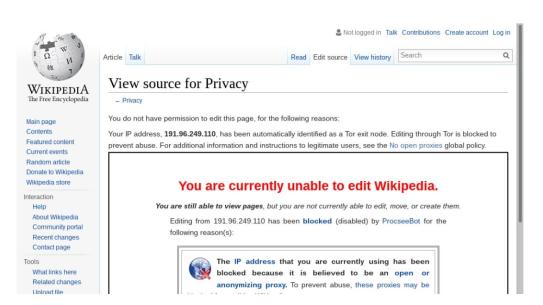

In the evolving landscape of digital manipulation and misinformation, deepfakes have emerged as a dual-use technology. While they have legitimate applications in art, science, and industry, their potential for malicious use in disinformation, identity fraud, and harassment has raised serious concerns. Consequently, a number of deepfake creation communities, including the pioneering r/deepfakes on Reddit, have been deplatformed to mitigate these risks.

In February 2018, just over a week after r/deepfakes was removed, MrDeepFakes (MDF) launched. A privately owned platform, MDF positioned itself as a community hub and claimed to be the largest online space dedicated to deepfake creation and discussion. This communal image contrasts sharply with the platform's primary function: hosting nonconsensual deepfake pornography.

Researchers at NYU Tandon led by Rachel Greenstadt, Professor of Computer Science and Engineering and a member of the NYU Center for Cybersecurity, examined these two key deepfake communities using a mixed-methods approach that combined quantitative and qualitative analysis. The study aimed to uncover how community members use these platforms, what deepfake creators think of the technology and its public perception, and how they view deepfakes as potential vectors of disinformation.

Their analysis, presented in a paper by lead author and Ph.D. candidate Brian Timmerman, revealed nuanced community dynamics on these boards. On both MDF and r/deepfakes, discussion leans predominantly toward technical details, and many members express a commitment to lawful and ethical practices. However, the content most often produced or requested in these forums was nonconsensual deepfake pornography. Further complicating the picture are facesets (collections of face images used to train deepfake models) with potential mis- and disinformation implications: politicians, business leaders, religious figures, and news anchors account for 22.3% of all faceset listings.

In addition to Greenstadt and Timmerman, the research team includes Pulak Mehta, Progga Deb, Kevin Gallagher, Brendan Dolan-Gavitt, and Siddharth Garg.

Timmerman, B., Mehta, P., Deb, P., Gallagher, K., Dolan-Gavitt, B., Garg, S., Greenstadt, R. (2023). Studying the online Deepfake Community. Journal of Online Trust and Safety, 2(1). https://doi.org/10.54501/jots.v2i1.126