Prof. Jiang is known for his contributions to stability and control of interconnected nonlinear systems, and is a key contributor to the nonlinear small-gain theory. His recent research focuses on robust adaptive dynamic programming, learning-based optimal control, nonlinear control, distributed control and optimization, and their applications to computational and systems neuroscience, connected transportation, and cyber-physical-human systems.

Prof. Jiang is a Deputy Editor-in-Chief of the IEEE/CAA Journal of Automatica Sinica. He has served as Senior Editor for IEEE Control Systems Letters (L-CSS), Systems & Control Letters, and Journal of Decision and Control, and as Subject Editor, Associate Editor, and/or Guest Editor for several journals, including International Journal of Robust and Nonlinear Control, Mathematics of Control, Signals and Systems, IEEE Transactions on Automatic Control, European Journal of Control, and Science China: Information Sciences.

Education

Ecole des Mines de Paris (ParisTech-Mines), France, 1993

Doctor of Philosophy, Automatic Control and Mathematics

University of Paris XI, 1989

Master of Science, Statistics

University of Wuhan, 1988

Bachelor of Science, Mathematics

Experience

New York University

Professor

From: September 2007 to present

Polytechnic University

Associate Professor

From: September 2002 to August 2007

Polytechnic University

Assistant Professor

From: January 1999 to August 2002

Sydney University

Research Fellow

Working with Professor David Hill.

From: May 1996 to May 1998

The Australian National University

Research Fellow

Working with Prof. Iven Mareels.

From: May 1994 to April 1996

INRIA, Sophia-Antipolis

Postdoctoral Fellow

The main responsibilities were to solve a long-standing open problem related to the attitude control of a spacecraft with only two control inputs and to develop novel tools and methods for underactuated mechanical systems.

From: October 1993 to April 1994

Publications

Journal Articles

Selected Recent Papers

- L. Cui, B. Pang and Z. P. Jiang, Learning-based adaptive optimal control of linear time-delay systems: A policy iteration approach, IEEE Transactions on Automatic Control, Vol. 69, No. 1, pp. 629-636, Jan. 2024. DOI: 10.1109/TAC.2023.3273786

- S. Wu, T. Liu, M. Egerstedt and Z. P. Jiang, Quadratic programming for continuous control of safety-critical multi-agent systems under uncertainty, IEEE Transactions on Automatic Control, Vol. 68, No. 11, pp. 6664-6679, Nov. 2023.

- P. Zhang, T. Liu and Z. P. Jiang, Tracking control of unicycle mobile robots with event-triggered and self-triggered feedback, IEEE Transactions on Automatic Control, Vol. 68, No. 4, pp. 2261-2276, April 2023.

- T. Bian and Z. P. Jiang, Reinforcement learning and adaptive optimal control for continuous-time nonlinear systems: A value iteration approach, IEEE Transactions on Neural Networks and Learning Systems, Vol. 33, No. 7, pp. 2781-2790, 2022.

- T. Liu, Z. Qin, Y. Hong, and Z. P. Jiang, Distributed optimization of nonlinear multi-agent systems: A small-gain approach, IEEE Transactions on Automatic Control, Vol. 67, No. 2, pp. 676-691, Feb. 2022.

- B. Pang and Z. P. Jiang, Robust reinforcement learning: A case study in linear quadratic regulation, Proc. 35th AAAI Conference on Artificial Intelligence, Vol. 35, No. 10, pp. 9303-9311, 2021.

- P. Zhang, T. Liu and Z. P. Jiang, Systematic design of robust event-triggered state and output feedback controllers for uncertain nonholonomic systems, IEEE Transactions on Automatic Control, Vol. 66, No. 1, pp. 213-228, Jan. 2021.

- Z. P. Jiang, T. Bian and W. Gao, Learning-based control: A tutorial and some recent results, Foundations and Trends in Systems and Control, Vol. 8, No. 3, pp. 176-284, 2020. (Invited Paper)

- T. Bian, D. Wolpert and Z. P. Jiang, Model-free robust optimal feedback mechanisms of biological motor control, Neural Computation, Vol. 32, pp. 562-595, Mar. 2020.

- T. Bian and Z. P. Jiang, Continuous-time robust dynamic programming, SIAM Journal on Control and Optimization, Vol. 57, No. 6, pp. 4150-4174, Dec. 2019.

- W. Gao, J. Gao, K. Ozbay and Z. P. Jiang, Reinforcement-learning-based cooperative adaptive cruise control of buses in the Lincoln Tunnel corridor with time-varying topology, IEEE Transactions on Intelligent Transportation Systems, Vol. 20, No. 10, pp. 3796-3805, Oct. 2019.

- Z. P. Jiang and T. Liu, Small-gain theory for stability and control of dynamical networks: A survey, Annual Reviews in Control, Vol. 46, pp. 58-79, Oct. 2018. (Invited Paper)

- W. Gao, Z. P. Jiang and K. Ozbay, Data-driven adaptive optimal control of connected vehicles, IEEE Transactions on Intelligent Transportation Systems, Vol. 18, No. 5, pp. 1122-1133, 2017.

- W. Gao and Z. P. Jiang, Nonlinear and adaptive suboptimal control of connected vehicles: A global adaptive dynamic programming approach, Journal of Intelligent & Robotic Systems, Vol. 85, pp. 597-611, 2017.

- T. Bian and Z. P. Jiang, Value iteration and adaptive dynamic programming for data-driven adaptive optimal control design, Automatica, Vol. 71, pp. 348-360, Sept. 2016.

- Y. Jiang and Z. P. Jiang, Global adaptive dynamic programming for continuous-time nonlinear systems, IEEE Transactions on Automatic Control, Vol. 60, No. 11, pp. 2917-2929, Nov. 2015.

- T. Bian, Y. Jiang and Z. P. Jiang, Decentralized adaptive optimal control of large-scale systems with application to power systems, IEEE Transactions on Industrial Electronics, Vol. 62, pp. 2439-2447, April 2015.

- T. Liu and Z. P. Jiang, A small-gain approach to robust event-triggered control of nonlinear systems, IEEE Transactions on Automatic Control, Vol. 60, No. 8, pp. 2072-2085, Aug. 2015.

- Y. Jiang and Z. P. Jiang, Adaptive dynamic programming as a theory of sensorimotor control, Biological Cybernetics, Vol. 108, pp. 459-473, 2014.

- Z. P. Jiang and T. Liu, Quantized nonlinear control - a survey, Special Issue in Celebration of the 50th Birthday of Acta Automatica Sinica, Acta Automatica Sinica, Vol. 39, No. 11, pp. 1820-1830, Nov. 2013. (Invited Paper)

- Z. P. Jiang and Y. Jiang, Robust adaptive dynamic programming for linear and nonlinear systems: An overview, European Journal of Control, Vol. 19, No. 5, pp. 417-425, 2013.

- T. Liu and Z. P. Jiang, Distributed formation control of nonholonomic mobile robots without global position measurements, Automatica, Vol. 49, pp. 592-600, 2013.

Authored/Edited Books

- Stability and Stabilization of Nonlinear Systems. London: Springer-Verlag, June 2011. (with I. Karafyllis)

- Nonlinear Control of Dynamic Networks. CRC Press, Taylor & Francis, April 2014. (with T. Liu and D. J. Hill)

- Robust Adaptive Dynamic Programming. Wiley-IEEE Press, 2017. (with Y. Jiang)

- Nonlinear Control Under Information Constraints. Science Press, Beijing, China, 2018. (with T. Liu)

- Robust Event-Triggered Control of Nonlinear Systems. Springer Nature, June 2020. (with T. Liu and P. Zhang)

- Learning-Based Control. Now Publishers, December 2020. ISBN: 978-1-68083-752-0. (with T. Bian and W. Gao)

- Trends in Nonlinear and Adaptive Control: A Tribute to Laurent Praly for His 65th Birthday. Springer, London, 2021. (Edited Book with C. Prieur and A. Astolfi)

Affiliations

-

CAN Lab: My Control and Networks (CAN) Lab.mainly focuses on interdisciplinary problems at the interface of AI/machine learning and control (under nonlinear, computing and communications constraints) as well as on optimal feedback mechanisms in movement science.

-

Affiliate Professor, Department of Civil and Urban Engineering, NYU Tandon

-

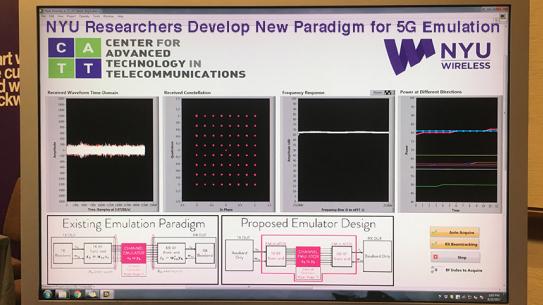

Affiliated member of the Center for Advanced Technology in Telecommunications (CATT)

-

Affiliated member of the Connected Cites for Smart Transportation (C2SMART) Center

Awards

- 2023 Elected to European Academy of Sciences and Arts

- 2023 Stanford's Top 2% Most Highly Cited Scientist

- 2022 Excellence in Research Award from NYU Tandon School of Engineering

- 2022 Fellow of the Asia-Pacific Artificial Intelligence Association

- 2021 Elected Foreign Member of the Academia Europaea (Academy of Europe)

- 2020 "Control Theory and Technology" Best Paper Award

- 2020 IEEE Beijing Chapter Young Author Prize (with Z. Li as the lead author)

- 2018 Clarivate Analytics Highly Cited Researcher

- 2018 Best Paper Award on Control at the IEEE International Conference on Real-Time Computing and Robotics, Kandima, Maldives

- 2017 Best Paper Award at the Asian Control Conference, Gold Coast, Australia

- 2017 Fellow of the Chinese Association of Automation

- 2016 Steve and Rosalind Hsia Biomedical Paper Award at the World Congress on Intelligent Control and Automation

- 2015 Best Conference Paper Award at the IEEE Conference on Information and Automation, Lijiang, China

- 2013 Fellow of the International Federation of Automatic Control

- 2013 Shimemura Young Author Prize (with Yu Jiang as the lead author) at the Asian Control Conference, Istanbul, Turkey

- 2011 Guan Zhao-Zhi Best Paper Award at the Chinese Control Conference

- 2009 Changjiang Chair Professorship at Beijing University

- 2008 Fellow of the Institute of Electrical and Electronics Engineers

- 2008 Best Theoretic Paper Award at the World Congress on Intelligent Control and Automation

- 2007 Distinguished Overseas Chinese Scholar Award from the NNSF of China

- 2005 JSPS Invitation Fellowship from the Japan Society for the Promotion of Science

- 2001 CAREER Award from the US National Science Foundation

- 1998 Queen Elizabeth II Research Fellowship Award from the Australian Research Council

Research News

Robust reinforcement learning: A case study in linear quadratic regulation

This research, whose principal author is Ph.D. student Bo Pang, was directed by Zhong-Ping Jiang, professor in the Department of Electrical and Computer Engineering.

Policy iteration, an important and popular method in reinforcement learning (RL), has been widely studied by researchers and used by practitioners in many kinds of real-life applications.

Policy iteration involves two steps: policy evaluation and policy improvement. In policy evaluation, a given policy is evaluated through a scalar performance index. In policy improvement, this performance index is used to generate a new control policy. The two steps are alternated until the solution of the RL problem at hand is found. When all the information involved in this process is known exactly, convergence to the optimal solution is provably guaranteed by the monotonicity of the policy improvement step: the performance of the newly generated policy is no worse than that of the given policy at each iteration.
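For a scalar plant, both steps have closed forms, so the loop can be sketched in a few lines. This is a minimal illustration under stated assumptions, not the paper's algorithm: it assumes a hypothetical plant x_{k+1} = a x_k + b u_k with stage cost q x_k^2 + r u_k^2, linear policies u_k = -k x_k, and exact knowledge of a and b (classical model-based policy iteration for discrete-time LQR, often attributed to Hewer). The evaluated values P_i should be non-increasing, which is the monotonicity property described above, and should converge to the positive root P* of the scalar Riccati equation.

```python
import math


def evaluate_policy(k, a, b, q, r):
    """Policy evaluation: P such that V(x) = P * x**2 for the policy u = -k * x.

    Solves P = q + r * k**2 + (a - b * k)**2 * P, which requires |a - b * k| < 1.
    """
    closed_loop = a - b * k
    if abs(closed_loop) >= 1.0:
        raise ValueError("policy is not stabilizing")
    return (q + r * k**2) / (1.0 - closed_loop**2)


def improve_policy(p, a, b, r):
    """Policy improvement: gain k of the minimizer u = -k * x of r * u**2 + p * (a * x + b * u)**2."""
    return a * b * p / (r + b**2 * p)


def policy_iteration(k0, a, b, q, r, iterations=20):
    """Alternate evaluation and improvement from a stabilizing gain k0; return the values P_i."""
    k, values = k0, []
    for _ in range(iterations):
        p = evaluate_policy(k, a, b, q, r)
        values.append(p)
        k = improve_policy(p, a, b, r)
    return values


def riccati_solution(a, b, q, r):
    """Positive root of b^2 P^2 + (r - q b^2 - a^2 r) P - q r = 0 (scalar discrete-time ARE)."""
    lin = r - q * b**2 - a**2 * r
    return (-lin + math.sqrt(lin**2 + 4.0 * b**2 * q * r)) / (2.0 * b**2)


# Hypothetical open-loop-unstable plant x_{k+1} = 1.2 x_k + u_k with q = r = 1,
# started from the stabilizing gain k0 = 1.0 (closed loop 1.2 - 1.0 = 0.2).
values = policy_iteration(k0=1.0, a=1.2, b=1.0, q=1.0, r=1.0)
p_star = riccati_solution(a=1.2, b=1.0, q=1.0, r=1.0)
print(values[:3], p_star)
```

Because every step is exact in this sketch, P_i converges to P*; the robustness question raised in the next paragraph concerns what happens when the steps are not exact.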

In practice, however, policy evaluation and policy improvement can hardly be implemented exactly, because of errors induced by function approximation, state estimation, sensor noise, external disturbances, and so on. A natural question is therefore: when is a policy iteration algorithm robust to errors in the learning process? In other words, under what conditions on the errors does policy iteration still converge to (a neighborhood of) the optimal solution, and how can the size of this neighborhood be quantified?

This paper studies the robustness of reinforcement learning algorithms to errors in the learning process. Specifically, the authors revisit the benchmark problem of discrete-time linear quadratic regulation (LQR) and address a long-standing open question: under what conditions is the policy iteration method robustly stable from a dynamical systems perspective?

Using advanced stability results from control theory, the authors show that policy iteration for LQR is inherently robust to small errors in the learning process and enjoys small-disturbance input-to-state stability: whenever the error in each iteration is bounded and small, the solutions of the policy iteration algorithm are also bounded and, moreover, enter and stay in a small neighborhood of the optimal LQR solution. As an application, a novel off-policy optimistic least-squares policy iteration is proposed for the LQR problem when the system dynamics are subject to additive stochastic disturbances. The new results in robust reinforcement learning are validated by a numerical example.
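The qualitative behavior can be reproduced in a toy setting under illustrative assumptions: a hypothetical scalar problem x_{k+1} = a x_k + b u_k with stage cost q x_k^2 + r u_k^2 and policies u_k = -k x_k, in which every policy evaluation is corrupted by a random error bounded by |e_i| <= eps. This is not the paper's off-policy optimistic least-squares algorithm, nor its analysis; it only shows that, in this example, the exact values of the generated policies stay bounded and remain close to the optimal value P* instead of converging to it exactly.

```python
import random


def evaluate_policy(k, a, b, q, r):
    """Exact value P(k) of the policy u = -k * x for x_next = a * x + b * u (needs |a - b * k| < 1)."""
    closed_loop = a - b * k
    if abs(closed_loop) >= 1.0:
        raise ValueError("policy is not stabilizing")
    return (q + r * k**2) / (1.0 - closed_loop**2)


def noisy_policy_iteration(k0, a, b, q, r, eps, iterations=50, seed=0):
    """Policy iteration whose evaluation step is corrupted by errors with |e_i| <= eps.

    Returns the exact values P(k_i) of the policies that are actually generated.
    """
    rng = random.Random(seed)  # seeded so the hypothetical error sequence is reproducible
    k, exact_values = k0, []
    for _ in range(iterations):
        p = evaluate_policy(k, a, b, q, r)
        exact_values.append(p)
        p_hat = p + rng.uniform(-eps, eps)  # inexact policy evaluation
        k = a * b * p_hat / (r + b**2 * p_hat)  # policy improvement based on p_hat
    return exact_values


# Hypothetical plant a = 1.2, b = 1, q = r = 1 (optimal value P* is about 1.952),
# stabilizing initial gain k0 = 1.0 and error bound eps = 0.05.
values = noisy_policy_iteration(k0=1.0, a=1.2, b=1.0, q=1.0, r=1.0, eps=0.05)
print(min(values), max(values[1:]))
```

In this example, reducing eps should shrink the remaining gap between the values and P*, which mirrors, in a very simple case, the idea of a neighborhood whose size depends on the error bound.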

This work was supported in part by the U.S. National Science Foundation.

Asymptotic trajectory tracking of autonomous bicycles via backstepping and optimal control

Zhong-Ping Jiang, professor of electrical and computer engineering (ECE) and member of the C2SMART transportation research center at NYU Tandon, directed this research. Leilei Cui, a Ph.D. student in the ECE Department, is the lead author. Zhengyou Zhang and Shuai Wang from Tencent are co-authors.

This paper studies the trajectory tracking and balance control problem for an autonomous bicycle, one that is ridden like a normal bicycle and then travels by itself to the next user. Such a bicycle is a non-minimum-phase, strongly nonlinear system.

Whereas most existing methods achieve only approximate trajectory tracking, this paper solves a long-standing open problem in bicycle control: how to develop a constructive design that achieves asymptotic trajectory tracking with balance. The key strategy is to view the controlled bicycle dynamics from an interconnected-system perspective.

More specifically, the nonlinear dynamics of the autonomous bicycle are decomposed into two interconnected subsystems: a tracking subsystem and a balancing subsystem. For the tracking subsystem, the popular backstepping approach is applied to determine the propulsive force of the bicycle. For the balancing subsystem, optimal control is applied to determine the steering angular velocity of the handlebar, in order to balance the bicycle and align it with the desired yaw angle. To tackle the strong coupling between the tracking and balancing subsystems, the small-gain technique is applied, for the first time, to prove asymptotic stability of the closed-loop bicycle system. Finally, the efficacy of the proposed exact trajectory tracking control methodology is validated by numerical simulations.
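The paper's bicycle model is not reproduced here, so the following only illustrates the generic backstepping recursion on a hypothetical tracking subsystem: a double integrator x1' = x2, x2' = u, where u stands in for a normalized propulsive force, tracking a hypothetical reference r(t) = sin(t). It is a minimal sketch under these assumptions and leaves out the balancing subsystem, the optimal steering controller, and the small-gain analysis.

```python
import math


def simulate_backstepping(c1=2.0, c2=2.0, dt=1e-3, t_final=10.0):
    """Backstepping tracking of the hypothetical reference r(t) = sin(t) for x1' = x2, x2' = u.

    Returns the tracking errors z1 = x1 - r(t) along an explicit Euler simulation.
    """
    x1, x2 = 1.0, 0.0  # start off the reference, which begins at r(0) = 0
    errors = []
    steps = int(round(t_final / dt))
    for i in range(steps + 1):
        t = i * dt
        r, r_dot, r_ddot = math.sin(t), math.cos(t), -math.sin(t)
        # Step 1: treat x2 as a virtual control that stabilizes the z1-subsystem.
        z1 = x1 - r
        alpha = r_dot - c1 * z1  # virtual control, gives z1' = z2 - c1 * z1
        # Step 2: drive the mismatch z2 = x2 - alpha to zero with the real input u.
        z2 = x2 - alpha
        u = r_ddot - c1 * (z2 - c1 * z1) - z1 - c2 * z2  # gives z2' = -z1 - c2 * z2
        errors.append(z1)
        # Explicit Euler step of the plant dynamics
        x1, x2 = x1 + dt * x2, x2 + dt * u
    return errors


errors = simulate_backstepping()
print(max(abs(e) for e in errors[-1000:]))
```

Each step designs a virtual control for one integrator and then compensates the mismatch at the next, so that in continuous time the function V = (z1^2 + z2^2)/2 satisfies V' = -c1 z1^2 - c2 z2^2; the small residual error in the simulation comes from the Euler discretization.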

"Our contribution to this field is principally at the level of new theoretical development," said Jiang, adding that the key challenges lie in the bicycle's inherent instability and in its having more degrees of freedom than control inputs. "Although the bicycle looks simple, it is much more difficult to control than driving a car because riding a bike needs to simultaneously track a trajectory and balance the body of the bike. So a new theory is needed for the design of an AI-based, universal controller." He said the work holds great potential for developing control architectures for complex systems beyond bicycles.

The work was done under the aegis of the Control and Networks (CAN) Lab led by Jiang, which consists of about 10 people and focuses on the development of fundamental principles and tools for the stability analysis and control of nonlinear dynamical networks, with applications to information, mechanical, and biological systems.

The research was funded by the National Science Foundation (Crossref Funder ID 10.13039/100000001).

RAPID: High-resolution agent-based modeling of COVID-19 spreading in a small town

COVID-19 has wreaked havoc across the planet. As of January 1, 2021, the WHO had reported nearly 82 million cases globally, with over 1.8 million deaths. In the face of this upheaval, public health authorities and the general population are striving to achieve a balance between safety and normalcy. The uncertainty and novelty of the current conditions call for the development of theory and simulation tools that could offer a fine resolution of multiple strata of society while supporting the evaluation of “what-if” scenarios.

The research team led by Maurizio Porfiri proposes an agent-based modeling platform to simulate the spreading of COVID-19 in small towns and cities. The platform is developed at the resolution of a single individual and is demonstrated for the city of New Rochelle, NY, the site of one of the first outbreaks registered in the United States. The researchers chose New Rochelle not only because of its place in the COVID-19 timeline, but also because agent-based modeling of mid-size towns is relatively unexplored, even though the U.S. is largely composed of small towns.

Supported by expert knowledge and informed by officially reported COVID-19 data, the model incorporates detailed elements of the spreading within a statistically realistic population. Along with pertinent functionality such as testing, treatment, and vaccination options, the model also accounts for the burden of other illnesses with symptoms similar to COVID-19. Unique to the model is the possibility to explore different testing approaches (in hospitals or drive-through facilities) and vaccination strategies that could prioritize vulnerable groups. Thanks to its fine-grained resolution, open-source nature, and wide range of features, the model could support decision making by public authorities.

The study reached some stark conclusions. For example, the results suggest that prioritizing vaccination of high-risk individuals has a marginal effect on the number of COVID-19 deaths; to obtain significant improvements, a very large fraction of the town population would have to be vaccinated. Importantly, the benefits of the restrictive measures in place during the first wave greatly surpass those of any of these selective vaccination scenarios. Even with a vaccine available, social distancing, protective measures, and mobility restrictions will still be key tools to fight COVID-19.

The research team included Zhong-Ping Jiang, professor of electrical and computer engineering; postdocs Agnieszka Truszkowska, who led the implementation of the computational framework for the project, and Brandon Behring; graduate student Jalil Hasanyan; as well as Lorenzo Zino from the University of Groningen, Sachit Butail from Southern Illinois University, Emanuele Caroppo from the Università Cattolica del Sacro Cuore, and Alessandro Rizzo from Turin Polytechnic. The work was partially supported by the National Science Foundation (CMMI-1561134 and CMMI-2027990), Compagnia di San Paolo, MAECI (“Mac2Mic”), the European Research Council, and the Netherlands Organisation for Scientific Research.